Doing Anomaly Detection At Scale

- Rahul Kumar

- Aug 20, 2023


How can you detect anomalies across more than one million time series that must be tracked daily?

That's a design problem. We will be discussing the challenges and how we can overcome them.

This post focuses on strategies for doing anomaly detection at scale, so we will only touch on the detection strategies and algorithms themselves at a very high level.

Anomaly Detection Algorithms:

The following are some commonly used anomaly detection approaches that identify deviations from an established baseline. Examples include:

Z-Score: Measures how many standard deviations a data point is from the mean.

Isolation Forest: Builds random isolation trees; anomalies get separated from the rest of the data in fewer splits than normal points.

Autoencoders: Neural networks that learn to compress and reconstruct data. A high reconstruction error indicates an anomaly.

LSTM (Long Short-Term Memory) Networks: A type of recurrent neural network well-suited for sequential data like time-series.
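As a rough illustration of the simplest approach in the list, here is a minimal Z-score sketch. The function name `zscore_anomalies`, the default threshold, and the sample data are assumptions made for this example, not part of any production pipeline.

```python
from statistics import mean, stdev

def zscore_anomalies(values, threshold=3.0):
    """Return the indices of points whose |z-score| exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # a constant series has no deviations to flag
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical daily values with one obvious spike at the end.
series = [10, 11, 9, 10, 12, 10, 11, 9, 10, 50]

# With only 10 points a |z-score| cannot reach 3, so a lower threshold is used here.
print(zscore_anomalies(series, threshold=2.5))  # [9]
```

The spike itself inflates the mean and standard deviation, which is why short windows need a lower threshold or a more robust baseline.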

Now let's discuss the bottlenecks of doing this at scale.

The desirable qualities of a running system are:

Low Latency

Low Cost

Trustworthy

Low Latency & Low Cost:

When you design a low-latency system at large scale, you have to overcome storage and compute problems.

One common approach is to design the system to scale horizontally (adding more machines) or vertically (upgrading existing machines) as demand for storage and compute increases. Horizontal scaling means distributing the workload across multiple nodes to prevent bottlenecks, together with load-balancing strategies that spread requests evenly across the available compute resources.

But these machines are expensive and limited. The system also needs to scale linearly; otherwise cost grows rapidly and can ultimately kill your project.

So there is a trade-off between low latency and low cost when you use this approach.

Consider how a project is likely to progress when a relational database is used to store the time series: as the number of time series and data points grows, the system eventually hits a bottleneck.

So the question remains: how would we solve this problem?

To approach the solution, let's list some scenarios:

There is no point in computing and evaluating anomalies in a daily batch for every series; it wastes compute resources if no one looks at the results every day.

Instead of provisioning and managing servers continuously, it is wiser to provision compute only when you need it.

Use low-cost storage such as AWS S3 instead of a relational database.

Model predictions have smaller errors close to the current timestamp; the further ahead we predict, the larger the standard deviation of the error becomes. Predicting far into the future in one go is therefore not a good approach in terms of model accuracy.
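To make the last scenario concrete, here is a small sketch of how forecast uncertainty can grow with the horizon. It assumes a plain random-walk model, where the standard deviation of the h-step-ahead error is the one-step standard deviation times the square root of h. That model and the name `forecast_error_std` are assumptions for illustration only; real models will show a different growth curve.

```python
import math

def forecast_error_std(step_std, horizon):
    """Std of the h-step-ahead forecast error under a random-walk assumption."""
    return step_std * math.sqrt(horizon)

# Compare predicting 1, 7 and 30 steps ahead with a one-step std of 1.0.
for h in (1, 7, 30):
    print(h, round(forecast_error_std(1.0, h), 2))
# 1 1.0
# 7 2.65
# 30 5.48
```

Under this assumption, a 30-step forecast is already more than five times as uncertain as a one-step forecast, which is the reason to predict in short increments.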

Handling all of the above scenarios leads to a low-cost and highly scalable design.

An event-based, stateless system is best suited here, and all of the scenarios discussed above can be taken care of with a serverless architecture. It lets you focus on solving the challenges particular to your business without taking on the overhead of managing infrastructure.

The following units are the major building blocks of the pipeline:

Serverless Compute Unit (AWS Lambda Function)

Lazy Execution (on-the-fly execution)

Low-cost storage such as AWS S3

The result: low cost and practically endless scale!

AWS Lambda Functions

AWS Lambda is a serverless compute service from Amazon Web Services (AWS) that lets you run code without provisioning or managing servers. It is designed to help you build scalable and cost-effective applications by executing code in response to events and triggers. A Lambda function is a piece of code that you write and upload to AWS Lambda; it performs a specific task in response to an event. Event sources include AWS services such as Amazon S3, Amazon DynamoDB, and Amazon Kinesis.
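As a minimal sketch of what such a function can look like in Python, the handler below reacts to an S3 object-created notification and lists the uploaded objects. The bucket and key in the usage example are made up, and a real handler would go on to load and process the object.

```python
from urllib.parse import unquote_plus

def lambda_handler(event, context):
    """Entry point that Lambda invokes once per event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    return {"processed": uploaded}

# Local usage with a hand-written event (hypothetical bucket and key).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "metrics"}, "object": {"key": "series/cpu+load.csv"}}}
    ]
}
print(lambda_handler(sample_event, None))
```

Because the handler is an ordinary function, it can be exercised locally with a hand-built event before it is deployed.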

What's Serverless:

Spin up Compute only when needed

Pay only for Processing Time

Full Architecture Pipeline for Anomaly Detection:

Lambda Work Manager: Wakes up periodically and checks what needs to be processed, since we want lazy execution. It then creates messages in a queue; each message simply describes one unit of work to execute.

Message Queue: This can be Amazon SQS. Once messages are available, the queue triggers the Lambda workers.

Lambda Workers: Each worker picks up the new data, performs any required preprocessing, uses the models to make predictions, computes anomalies from them, and saves the results back to storage.
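The three units above can be sketched roughly as follows. Everything specific here is an assumption made for illustration: the message fields (`series_id`, `from_ts`, `to_ts`), the staleness rule (a series needs work when it has data newer than its last processed timestamp), and the explicit `send` and `process` parameters, which stand in for the queue client and the model code. In a real deployment Lambda calls a handler with only `(event, context)`, so those dependencies would be bound elsewhere.

```python
import json

def plan_work(series_index):
    """Return one message body per series that has unprocessed data."""
    messages = []
    for series_id, state in series_index.items():
        if state["last_data_ts"] > state["last_processed_ts"]:
            messages.append(json.dumps({
                "series_id": series_id,
                "from_ts": state["last_processed_ts"],
                "to_ts": state["last_data_ts"],
            }))
    return messages

def manager_handler(event, context, series_index, send):
    """Scheduled entry point: enqueue one message per stale series."""
    bodies = plan_work(series_index)
    for body in bodies:
        send(body)  # e.g. a wrapper around an SQS client's send_message call
    return len(bodies)

def worker_handler(event, context, process):
    """Queue-triggered entry point: each record's 'body' holds one message."""
    return [process(json.loads(record["body"])) for record in event["Records"]]

# Local usage: a plain list stands in for the queue.
index = {
    "cpu": {"last_data_ts": 200, "last_processed_ts": 100},
    "mem": {"last_data_ts": 100, "last_processed_ts": 100},
}
queue = []
manager_handler({}, None, index, queue.append)
sqs_event = {"Records": [{"body": body} for body in queue]}
print(worker_handler(sqs_event, None, lambda job: job["series_id"]))  # ['cpu']
```

Only the series with new data produces a message, so the workers never run for series that have nothing to process.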

The figure below shows what the worker unit's messages and configuration look like. Only the detected anomalous data points need to be stored in a relational database such as Postgres; everything else, including logs and complete results, can be stored in our cheap S3 buckets.

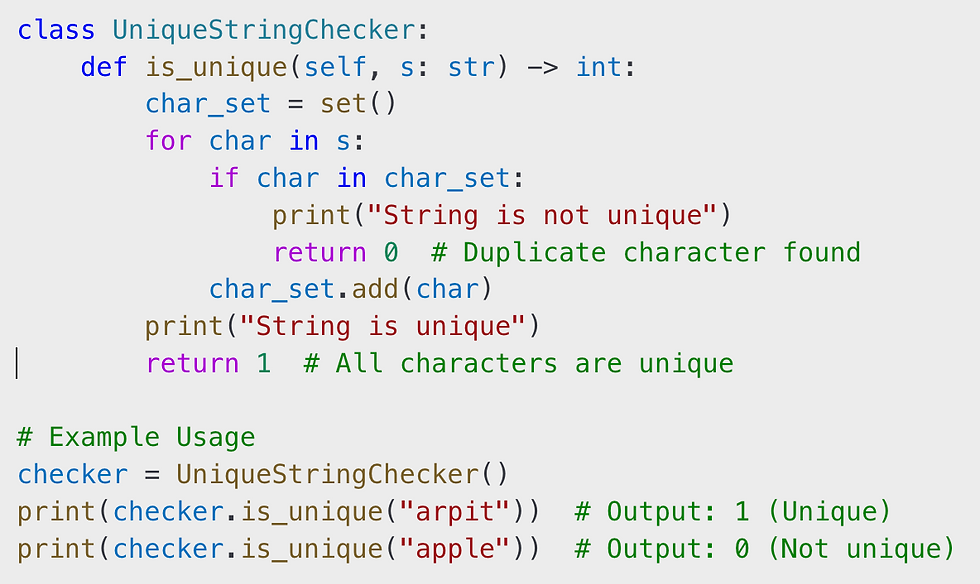

Lambda Worker Unit
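The storage split described above could look roughly like this inside a worker. The point format, the score threshold, and the S3 key layout are all hypothetical; the sketch only prepares the rows and the object body and leaves the actual database insert and S3 upload to the caller.

```python
import json

def split_results(series_id, points, threshold):
    """Separate anomaly rows (for the relational DB) from full results (for S3)."""
    if not points:
        return [], None, None
    # Only points flagged as anomalous become database rows.
    rows = [
        (series_id, p["ts"], p["value"], p["score"])
        for p in points
        if p["score"] > threshold
    ]
    # The complete result set goes to cheap object storage under a made-up key layout.
    key = f"results/{series_id}/{points[0]['ts']}-{points[-1]['ts']}.json"
    body = json.dumps(points)
    return rows, key, body

points = [
    {"ts": 1, "value": 10.0, "score": 0.2},
    {"ts": 2, "value": 55.0, "score": 4.1},
]
rows, key, body = split_results("cpu", points, threshold=3.0)
print(rows)  # [('cpu', 2, 55.0, 4.1)]
print(key)   # results/cpu/1-2.json
```

Keeping only the anomalous rows in the relational database keeps that table small, while the full history remains available in S3 if anyone needs it.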

Summary: We discussed how to design an anomaly detection system that handles a huge volume of time series with low latency and low cost using a serverless architecture, and looked at the different aspects of that design.
